The Science Of Sound


Back in the pioneering days of recording, a sound engineer’s uniform wasn’t the jeans and T-shirt we’d expect to see nowadays, but instead, a white lab coat. More than just dressing differently, though, this change in uniform over the years has mirrored a key shift in the methodology of recording – moving away from a scientific analytical approach to one that is more akin to a subjective creative process.

As you’d expect, this creative methodology has undoubtedly pushed forward the sound of music, as well as making an ‘art form’ of the process of recording, but have we also lost sight of some of the important scientific and technical aspects of recording? In short, did those engineers in lab coats actually have something important to offer?

In truth, the process of recording should always be a lively interplay between creative and scientific disciplines. However, while many of us will be familiar with the principles and theories behind sound, there often seems to be a disconnect between theory and practice – we might understand that a decibel measures amplitude, for example, but how does this directly impact on our daily workflow? This feature aims to bridge the gap between theory and practice – to highlight the important scientific theories of sound, but then make the direct link to the practices of recording. If you’re new to recording, therefore, it forms a perfect introduction to the rudiments of sound, while those with more experience will gain an enlightened view on practices and techniques they use on a daily basis.

D Is For Decibel

Our journey through the science of sound is principally focused on the key attributes and qualities of sound – aspects such as amplitude or frequency, for example, but also deeper issues like phase and, of course, auditory perception. As most of us will be aware, sound is formed by changes in the relative density of air molecules – the air ‘vibrating’, in other words. We can think of sound as radiating away from a point source (much like ripples on a pond), with the oscillations formed by the air molecules either being densely packed – compression, in other words – or more widely spaced in the form of rarefactions.

Of course, the compression and rarefaction of air molecules doesn’t mean much until our ears transform the acoustic energy moving through the air into something we can interpret as sound. In the case of our ears, the compressions and rarefactions physically vibrate our eardrums, and the inner ear converts those vibrations into nerve signals that travel to the brain. In the same way, the diaphragm of a microphone is moved by the compressions and rarefactions, this time generating an electric current that can be used for the process of recording or, in this case, analysing the principal qualities of sound.

Feeding the output of the mic to an oscilloscope, we can see that sound has two principal characteristics: amplitude, measured in decibels, and frequency (which we hear as pitch), measured in Hertz. Indeed, look at any mixing console and you’ll see these key parameters emblazoned across various points on a channel strip, but are you really sure what those parameters mean in relation to our daily recording/mixing activities?

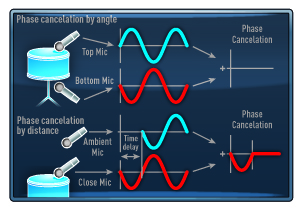

Two ways in which phase problems can be introduced into a recording: either in respect to the orientation of microphone capsules, or to the relative distance between them.

Amp It Up

Arguably one of the biggest misunderstandings in our daily workflow is the concept of the decibel and how it impacts on our actions – whether we’re raising a fader, or applying a compressor. The key issue is that decibels are often perceived as being a linear scale – a simple, scalable series of numbers that represents the relative amplitude of a signal. But things aren’t quite as straightforward as that!

The first point to note is that decibels actually represent a ratio between two levels, so a decibel figure always needs a point of reference. This is perfectly illustrated on a mixing console, where we see a series of faders each marked from –inf at the bottom of their travel, through 0dB about two-thirds of the way up, to +10dB at the top. 0dB isn’t silence, therefore, but unity gain: the signal leaves the fader at the same level it arrived, 0dB different from its reference.

The next issue is one of scale: if we pull a fader down to half its travel, for example, do we halve the amplitude of the signal passing through it? The short answer is no.

Rather than being a straight linear scale, therefore, decibels work to a logarithmic scale – in other words, equal steps in decibels correspond to equal multiplications of the signal level rather than equal increments added to it. The reasons for a logarithmic decibel scale are best explained by returning to the ear, and, in particular, our ability to perceive signals over a wide dynamic range. Mathematically speaking, this dynamic range is difficult to represent numerically in a linear fashion – a fader would have to be several metres long, for example, or you’d need numerical divisions that extend into the millions.
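To put numbers on the fader question, here is a minimal Python sketch of the standard conversion between decibels and amplitude (voltage or sound-pressure) ratios, ratio = 10^(dB/20). The function names are our own, chosen for illustration. Note that halving the amplitude corresponds to roughly -6dB, not to half of the fader’s travel.

```python
import math


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level change in dB to a ratio of amplitudes (voltage or pressure)."""
    return 10 ** (db / 20)


def amplitude_ratio_to_db(ratio: float) -> float:
    """Convert a positive amplitude ratio back to a level change in dB."""
    return 20 * math.log10(ratio)


for db in (0, -6, -20, -60, 120):
    print(f"{db:+4d} dB -> amplitude x{db_to_amplitude_ratio(db):g}")
```

At 120dB, roughly the span of human hearing, the amplitude ratio is one million, which is why a linear fader scale would be so unwieldy.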

Decibels In The Real World

By being logarithmic, decibel modifications – whether applied by a channel fader or a compressor, among other things – work in an interesting way. In effect, the initial movements are more graduated, dealing with relatively small increments (in relation to our full perception of dynamic range), but the changes become increasingly dramatic as you move up or down the scale. What’s interesting is that this logarithmic scale is somewhat analogous to how we perceive dynamics – in short, the fader movements sound smooth and ‘linear’, but what’s actually being applied is logarithmic scaling.

Practically speaking, there are some important ‘magical’ numbers to note, all of which reinforce many actions you carry out on a daily basis. One is 6dB: +6dB roughly doubles the signal amplitude and -6dB roughly halves it, which helps explain why some faders top out at +6dB. Multiples and fractions of 6dB pop up on a regular basis for the same reason: 12dB is roughly four times the amplitude, while 3dB is roughly a doubling of power (around 1.4 times the amplitude). Next time you apply 6dB of gain reduction with a compressor, therefore, you know that you’re effectively halving the amplitude of the peaks it acts on. Equally, it’s easy to see why small changes around 0dB (+1 or -2dB, for example) still have a noticeable impact on relative level, and why so much of a mix sits in the +/-6dB range around 0dB.
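The ‘magic numbers’ are easy to check with a short sketch. Amplitude (voltage or pressure) ratios use 20 × log10 while power ratios use 10 × log10, which is why 3dB is described as a doubling of power and 6dB as a doubling of amplitude. Strictly speaking, +6dB gives a factor of about 1.995; an exact doubling is +6.02dB. The function names below are illustrative.

```python
def amplitude_ratio(db: float) -> float:
    """Amplitude (voltage/pressure) ratio for a level change in dB: 20*log10 convention."""
    return 10 ** (db / 20)


def power_ratio(db: float) -> float:
    """Power/intensity ratio for a level change in dB: 10*log10 convention."""
    return 10 ** (db / 10)


for db in (1, 2, 3, 6, 9, 12, 18):
    print(f"{db:>3} dB  amplitude x{amplitude_ratio(db):5.2f}  power x{power_ratio(db):6.2f}")
```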

Understanding how room acoustics work is an intrinsic part of recording practice

Hertz Rental

By comparison to dynamic range, the bandwidth of human hearing is relatively modest, from 20Hz at the lowest end of our hearing to 20,000Hz at the upper limit of auditory perception. Unlike the logarithmic decibel, frequency in Hertz is a straightforward linear scale, but we perceive pitch logarithmically: each doubling of frequency sounds like the same step (an octave), whether it’s 100Hz to 200Hz or 5kHz to 10kHz. As a result, our equipment tends to handle frequency on a logarithmic basis. Look at a parametric EQ, for example, noting how a low-mid band works over a frequency range of around 1,000Hz (220Hz–1,200Hz) while the high-mid control has a far greater range of 7,000Hz (1kHz–8kHz), even though the two bands span a comparable number of octaves.
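To see why EQ ranges look lopsided in Hertz, it helps to count octaves instead. This sketch uses the band limits quoted above (real EQs vary) and the fact that an octave is a doubling of frequency, so the number of octaves between two frequencies is log2(high / low). The function name is illustrative.

```python
import math


def octaves_between(low_hz: float, high_hz: float) -> float:
    """Number of octaves between two frequencies (each octave doubles the frequency)."""
    return math.log2(high_hz / low_hz)


# Band limits as quoted in the text; real equalisers differ from model to model
bands = {
    "hearing (20Hz-20kHz)": (20, 20_000),
    "low-mid band (220Hz-1.2kHz)": (220, 1_200),
    "high-mid band (1kHz-8kHz)": (1_000, 8_000),
}

for name, (low, high) in bands.items():
    print(f"{name}: {high - low} Hz wide, {octaves_between(low, high):.2f} octaves")
```

The high-mid band covers seven times as many Hertz as the low-mid band, but only about half an octave more, and the whole of human hearing fits into roughly ten octaves.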

From an engineering perspective, frequency largely relates to the timbre of sound; more specifically, the harmonic series. Timbre, of course, allows us to differentiate between two sounds playing at the same pitch – a trumpet playing a concert A, for example, sounds different from an oboe playing the same note. The difference between the two instruments is defined by their harmonic structure, with each instrument having a unique series of partials or overtones above the fundamental frequency (or pitch) of the given note.

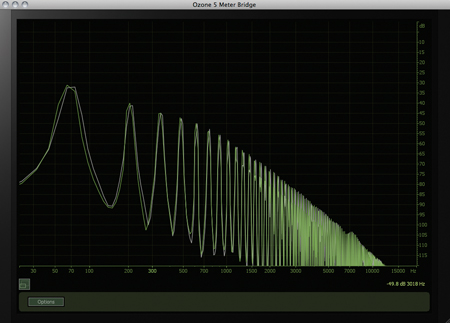

For a sound to be considered ‘musical’ its harmonic structure needs to be constructed from partials that are mathematically related to the fundamental, typically whole-number multiples of it. For example, a bass guitar playing its open G string (G2) would have its fundamental at around 100Hz (98Hz, to be precise), a second harmonic at around 200Hz, a third harmonic at around 300Hz and a fourth harmonic at around 400Hz. What gives an instrument its colour, therefore, is the balance and amplitude of these additional harmonics. A bright sound, for example, will have an abundance of harmonics moving right up through the audio spectrum, whereas a purer sound tends to exhibit just a few harmonics. Equally, you’ll also find a different propensity for even- or odd-ordered harmonics between instruments. A clarinet, for example, has a hollow sound due to its bias towards odd-ordered harmonics, whereas a full-bodied violin section has a good spread of even and odd harmonics.
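A harmonic series is simply the fundamental multiplied by whole numbers. This minimal sketch assumes idealised, perfectly harmonic partials (real strings are slightly inharmonic) and ignores amplitudes, which are what actually shape the timbre; the `odd_only` option is a crude, hypothetical stand-in for a clarinet-like spectrum.

```python
def harmonic_series(fundamental_hz: float, count: int, odd_only: bool = False) -> list[float]:
    """Return the first `count` harmonic frequencies of an idealised harmonic sound.

    Harmonics are whole-number multiples of the fundamental. With odd_only=True,
    only the odd-numbered harmonics (1st, 3rd, 5th, ...) are returned, a rough
    model of a clarinet-like spectrum.
    """
    step = 2 if odd_only else 1
    return [fundamental_hz * (step * i + 1) for i in range(count)]


g2 = 98.0  # open G string of a bass guitar (G2), in Hz
print("all harmonics:", harmonic_series(g2, 4))
print("odd harmonics:", harmonic_series(g2, 4, odd_only=True))
```

For the open G string, the first call gives 98, 196, 294 and 392Hz, while the odd-only version gives 98, 294, 490 and 686Hz.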

Decibels use a logarithmic scale in order to cover the vast dynamic range of our hearing. This partially explains why faders don’t behave in an entirely linear way.

All Things Equal

As we’ll see later, harmonic structure is a recurring theme in the science behind recording, but fundamentally, it impacts on our approach to equalisation. While it’s easy to see an equaliser as an arbitrary ‘colouring’ device, it’s worth analysing your application of EQ more rigorously in relation to the harmonic series.

An interesting point to note is the relatively limited range over which fundamental frequencies exist – from around 27Hz on the lowest note of a piano to around 2kHz as the highest fundamental a flute can produce (only a handful of notes, such as the top octave of a piano, reach higher, up to around 4.2kHz). Across the range of 27Hz to 2kHz, therefore, you’re largely dealing with fundamental frequencies, although, of course, the exact fundamental range will depend on the instrument you’re processing. Given that concert A is 440Hz, though, and that most instrumentation doesn’t extend more than a couple of octaves above or below this, most of your musical information resides between roughly 100Hz and 1.5kHz.
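These fundamental ranges can be checked with the equal-tempered tuning formula f = 440 * 2^((n - 69) / 12), where n is a MIDI note number and A4 (concert A) is note 69. The sketch assumes equal temperament with A = 440Hz; the function name is illustrative, and the flute figure should be treated as approximate, since the top of the range varies between players and instruments.

```python
def note_frequency(midi_note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency of a MIDI note number, with A4 (note 69) at a4_hz."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


landmarks = {
    "A0 (lowest piano key)": 21,
    "A2 (two octaves below concert A)": 45,
    "A4 (concert A)": 69,
    "A6 (two octaves above concert A)": 93,
    "C7 (around the top of a flute)": 96,
    "C8 (highest piano key)": 108,
}

for name, note in landmarks.items():
    print(f"{name:34s} {note_frequency(note):8.1f} Hz")
```

A2 and A6, two octaves either side of concert A, come out at 110Hz and 1,760Hz, while the top piano key, C8, lands at about 4,186Hz.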

Given our understanding of the placement of fundamental frequencies, it’s easy to see why the frequency range above 2kHz has so much to do with the colour of music. Between 2 and 6kHz, for example, we’ll see a large amount of second, third and fourth harmonics, which are often an important means of pushing an instrument forwards in a mix. Beyond 6kHz, we start to play with the upper harmonics of a sound, which will vary in density from instrument to instrument. Towards the upper end of the harmonic series, it’s also interesting to note how closely packed the harmonics become in musical terms: successive harmonics start an octave apart, then a fifth, then a fourth, and so on in ever smaller intervals. This largely explains why we think of this area as being the ‘detail’ of the sound, and why boosting it adds to the vibrancy of a sound.
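In Hertz, harmonics are evenly spaced (every 100Hz for a 100Hz fundamental), but we hear pitch in terms of intervals, that is, frequency ratios. This sketch measures the interval between successive harmonics in equal-tempered semitones, 12 * log2(upper / lower). The 100Hz fundamental is an arbitrary choice, since the intervals don’t depend on it.

```python
import math


def interval_semitones(lower_hz: float, upper_hz: float) -> float:
    """Musical interval between two frequencies, in equal-tempered semitones."""
    return 12 * math.log2(upper_hz / lower_hz)


fundamental = 100.0  # illustrative fundamental, in Hz
for n in range(1, 11):
    gap = interval_semitones(n * fundamental, (n + 1) * fundamental)
    print(f"harmonic {n:2d} -> {n + 1:2d}: {gap:5.2f} semitones")
```

The gap shrinks from 12 semitones (an octave) to about 7 (a fifth) and 5 (a fourth), and by the tenth harmonic it’s under two semitones.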

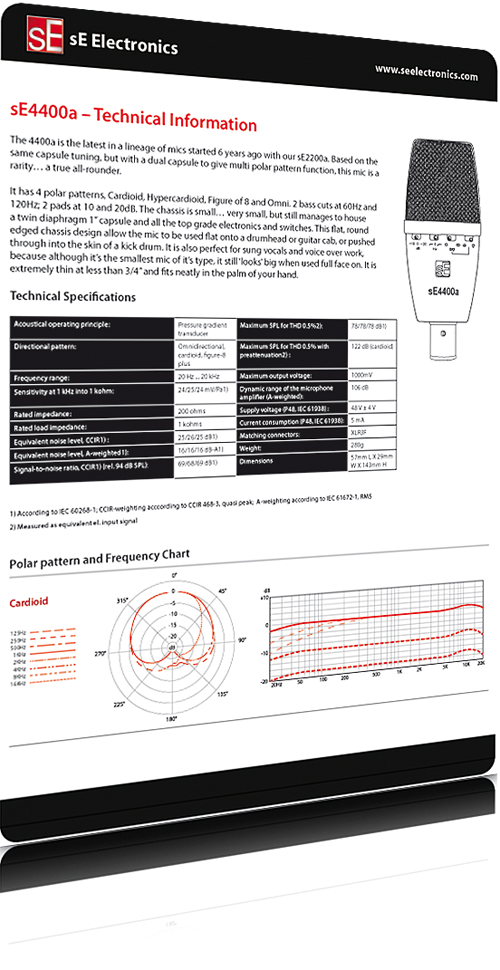

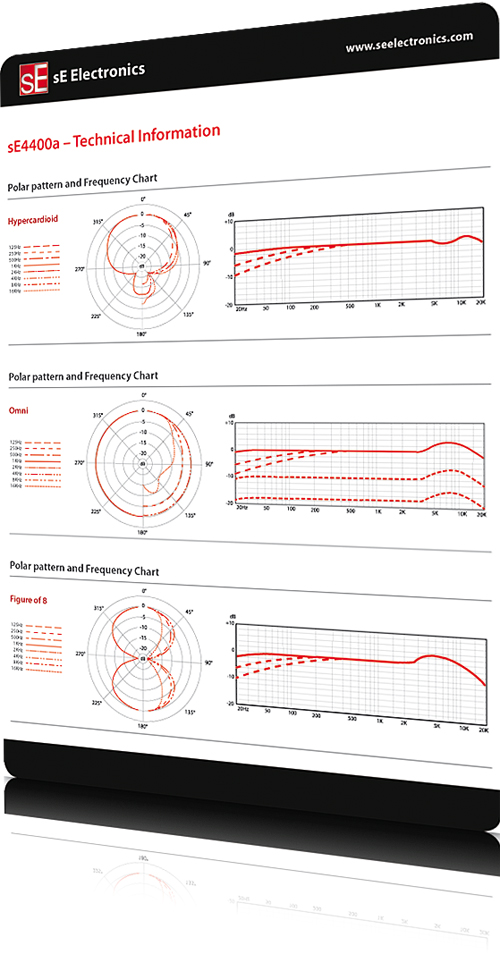

Spec sheets offer a revealing insight into the technical and sonic performance of a microphone.

In Phase

Although pitch and amplitude are both defining ingredients of sound, there are other aspects we need to consider if we’re to fully understand its behaviour – including phase, the speed of sound (velocity, in other words) and auditory perception. One area that’s vitally important – but often woefully misunderstood – is phase. Put simply, phase describes where a waveform is within its cycle of positive and negative oscillations at any given moment; to put it in acoustic terms, whether the air molecules are being compressed or rarefied. By itself, the relative phase of a signal is largely irrelevant; however, if two otherwise identical signals are combined in such a way that they’re completely out of phase with one another, the result is complete silence. The sounds cancel each other out, in other words.
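A quick way to see phase cancellation is to mix a sine wave with a copy of itself shifted in phase. In this sketch, the sample rate, test frequency and function names are illustrative assumptions: a 0° shift doubles the peak level, a 90° shift gives about 1.41 times the original, and a 180° shift cancels the signal completely.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (illustrative)
FREQ_HZ = 440.0       # test-tone frequency (illustrative)


def sine(phase_offset_deg: float = 0.0, n: int = 1000) -> list[float]:
    """Return n samples of a unit-amplitude sine wave shifted by phase_offset_deg degrees."""
    offset = math.radians(phase_offset_deg)
    return [math.sin(2 * math.pi * FREQ_HZ * t / SAMPLE_RATE + offset) for t in range(n)]


def peak_of_mix(a: list[float], b: list[float]) -> float:
    """Peak absolute level when two signals are summed sample by sample."""
    return max(abs(x + y) for x, y in zip(a, b))


reference = sine()
for offset in (0, 90, 180):
    print(f"{offset:3d} degree shift: peak of mix = {peak_of_mix(reference, sine(offset)):.3f}")
```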

To better understand phase, it’s worth looking at a number of ways in which phase problems can creep into a recording. One of the most obvious is created when a snare drum is recorded with two mics – one underneath it looking up, the other on top looking down. Bar the sonic differences between the top and bottom of the snare, the two signals are largely identical, but because the capsules face in opposite directions, a hit that pushes air towards one mic pulls it away from the other, so the two signals are inverted relative to each other. When the signals are combined, we hear large amounts of phase cancellation – not complete silence, of course (there are still some inherent differences in the two sounds), but enough to weaken the overall effect.

The other way in which phase can create a problem is in relation to the distance between microphone capsules, coupled with the relatively slow speed of sound (around 340m/s). Again, think of a snare drum being recorded with two mics, but this time the second microphone is placed five metres further from the snare than the first. Given the relatively slow speed of sound, the sound of the snare arrives at the two capsules at slightly different times. In theory, at any frequency where the delay equals half a cycle (or any odd number of half cycles), the two signals will be completely out of phase with one another, while other frequencies will partially cancel or reinforce. Even if the phase cancellation is only partial, it’s highly likely that the combination of sources will produce a weaker overall sound.
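The arithmetic behind the five-metre example can be sketched as follows, taking 340m/s as the speed of sound. A delay of d seconds puts the two signals completely out of phase wherever d is an odd number of half cycles, i.e. at f = (2k + 1) / (2d), producing a regular series of notches (a comb filter). This assumes both mics pick up the snare at equal level; in practice the farther mic is quieter, so the notches are shallower. The function names are illustrative.

```python
SPEED_OF_SOUND = 340.0  # m/s, the approximate figure used in the text


def arrival_delay_s(extra_distance_m: float, speed: float = SPEED_OF_SOUND) -> float:
    """Extra time, in seconds, that sound takes to cover an additional distance."""
    return extra_distance_m / speed


def cancellation_frequencies(delay_s: float, count: int = 5) -> list[float]:
    """Frequencies at which the delay equals an odd number of half cycles.

    At these frequencies, two equal-level copies of a signal (one delayed by
    delay_s) are fully out of phase and cancel: the notches of a comb filter.
    """
    return [(2 * k + 1) / (2 * delay_s) for k in range(count)]


delay = arrival_delay_s(5.0)
print(f"delay: {delay * 1000:.1f} ms")
print("notches (Hz):", [round(f, 1) for f in cancellation_frequencies(delay)])
```

Five metres gives a delay of about 14.7ms, with the first notch at 34Hz and further notches every 68Hz above it.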

Where phase problems exist, there are a number of remedies that can be applied. Simple phase reversal (polarity inversion), of course, should be an appropriate remedy when capsules are facing 180º from each other. For any time-based issues, though, it might be a case of using a tape measure and calculator, time-shifting a track by about 2.92ms (around 129 samples at 44.1kHz) for every metre of distance, a figure that assumes sound travels at roughly 343m/s. Either way, these small differences can have a big impact on your overall sound, especially when you’re using multiple mics around the same sound source.
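For the tape-measure-and-calculator approach, this sketch converts an extra path length into a time shift and a whole number of samples. It assumes a speed of sound of 343m/s (around 20°C), the value the 2.92ms-per-metre figure implies; the function name is hypothetical, and in practice you’d apply the result with your DAW’s track-nudge or delay-compensation controls.

```python
SAMPLE_RATE = 44_100    # Hz
SPEED_OF_SOUND = 343.0  # m/s at roughly 20°C (assumption)


def delay_compensation(extra_distance_m: float, sample_rate: int = SAMPLE_RATE,
                       speed: float = SPEED_OF_SOUND) -> tuple[float, int]:
    """Return (milliseconds, whole samples) by which to move the farther mic's track earlier."""
    seconds = extra_distance_m / speed
    return seconds * 1000, round(seconds * sample_rate)


for metres in (0.5, 1.0, 2.0, 5.0):
    ms, samples = delay_compensation(metres)
    print(f"{metres:4.1f} m -> shift {ms:5.2f} ms ({samples} samples)")
```

One metre works out at about 2.92ms, or 129 samples at 44.1kHz; rounding to whole samples leaves a residual error of under half a sample (about 11 microseconds).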

Timbre is formed by a series of harmonic overtones pitched over and above the fundamental frequency, and enables us to differentiate between two instruments playing at the same pitch

Directional Perception

As we’ve seen, it’s easy to become absorbed in the mechanics of sound, but it’s equally important to remember a whole additional layer in the equation – our auditory response to sound. We’ve already touched on some important issues – our logarithmic response to amplitude and the bandwidth of human hearing – but there are many other factors to consider in respect to our hearing, all of which have a direct impact on our working practices.

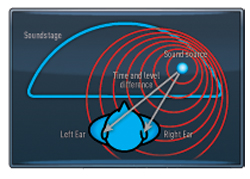

One of the most fundamental concepts of auditory perception is our ability to localise sound – to put it more specifically, our perception of stereo. As L/R stereo remains the principal format in which music is delivered, it makes sense to understand how we actually perceive it. In truth, our ability to localise sound is based on two guiding factors: level and phase (or, more precisely, the timing of a sound’s arrival at each ear). The level equation is easy to understand given the large chunk of meat and bone between our ears that acts as a sound absorber. In short, therefore, sounds on the right-hand side of the soundstage are partly masked from our left ear by virtue of our head being in the way!

However, the level-masking of our head isn’t as complete as we might first think. There’s still an amount of sound from the right-hand side of the soundstage reaching our left ear, although given the extra distance it has covered, it arrives slightly later than the same sound reaching our right ear. Stereo perception, therefore, is as much about phase differences between the left and right ears (brought about through these timing shifts) as it is about level differences between each ear.
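To put a number on these timing differences, a common textbook approximation (not from this article) is Woodworth’s spherical-head model, ITD = (r / c)(θ + sin θ), where r is the head radius, c the speed of sound and θ the source angle from straight ahead. The 8.75cm head radius below is an assumed average, and the function name is illustrative.

```python
import math

HEAD_RADIUS_M = 0.0875  # assumed average head radius, in metres
SPEED_OF_SOUND = 343.0  # m/s


def interaural_time_difference(azimuth_deg: float) -> float:
    """Approximate interaural time difference, in seconds, for a distant source.

    Uses Woodworth's spherical-head approximation. azimuth_deg is measured from
    straight ahead (0) to directly to one side (90); valid for 0-90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


for azimuth in (0, 15, 30, 45, 60, 90):
    print(f"{azimuth:2d} degrees: {interaural_time_difference(azimuth) * 1e6:5.0f} microseconds")
```

A source directly to one side reaches the far ear roughly 0.66ms after the near ear, and even a source 15° off-centre produces a difference of more than 100 microseconds.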

Again, this sound theory gets interesting when we start applying it in a practical situation. The two principal stereo recording techniques sit on either side of the stereo perception debate – a coincident pair, for example, largely uses the directional characteristics of cardioid microphones to capture level differences between the left and right of the soundstage, whereas a spaced pair of omnis largely works with the timing (phase) differences between the two sides of the soundstage. Ultimately, it’s a perfect illustration of the flaws and compromises that a recording needs to make (it is, after all, a form of illusion), and demonstrates how a more informed understanding of the mechanics can yield a more controlled and effective end result.
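The level differences a coincident pair captures can be estimated from the ideal cardioid polar pattern, gain = 0.5 * (1 + cos θ). This sketch assumes an XY pair with capsules angled 45° either side of centre (a 90° included angle) and perfect cardioid patterns; real microphones deviate, especially off-axis. The function names are illustrative. Because the capsules are coincident, the pair produces no timing difference at all, only a level difference.

```python
import math


def cardioid_gain(off_axis_deg: float) -> float:
    """Ideal first-order cardioid sensitivity: 1 on-axis, 0 directly behind."""
    return 0.5 * (1 + math.cos(math.radians(off_axis_deg)))


def xy_level_difference_db(source_deg: float, capsule_angle_deg: float = 45.0) -> float:
    """Left-minus-right level difference (dB) for a coincident XY pair of cardioids.

    The left capsule points capsule_angle_deg to the left and the right capsule the
    same amount to the right; positive source_deg means the source is to the left.
    """
    left = cardioid_gain(source_deg - capsule_angle_deg)
    right = cardioid_gain(source_deg + capsule_angle_deg)
    return 20 * math.log10(left / right)


for source in (0, 15, 30, 45, 60):
    print(f"source {source:2d} degrees left: L-R = {xy_level_difference_db(source):5.2f} dB")
```

A source 30° to the left comes out roughly 3.9dB louder in the left channel, rising to about 8.5dB at 60°.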

Loudness is a psychological response to sound, formed from an average of signal levels over time rather than an instantaneous response to peak levels.

The Beauty Of Numbers

Having explored both composition and sound engineering for a number of years now, I’ve never found it more apparent that music fundamentally works as the aural rationalisation of the beauty of numbers. To a large extent, the acoustic world that surrounds us is chaos (the noise of traffic, for example, or a thunderstorm), with the role of a musician – or a sound engineer, for that matter – being to rationalise meaning and order from this madness. Even the most hardcore dubstep, for example, still seeks to tame the madness, even if the equation is as simple as imposing some basic, repetitive rhythmic structure.

While there’s some argument for a trial-and-error approach to finding the right answer (we have an innate enjoyment of harmony, for example, and a natural aversion to dissonance), a more informed understanding of both music and sound certainly helps in finding the best solutions in the shortest amount of time. Rather than killing creativity, therefore, the concepts and practices behind the behaviour of sound can empower us to produce better, more effective music. Arguably, the concepts we’ve covered are only the beginning of a voyage of discovery, but they illustrate how the science of sound provides a solid underpinning to the practices we carry out on a daily basis.

So, in the quest for producing better music, maybe the answer isn’t another plug-in, a more expensive synth or another microphone (although those things are always welcome, of course!), but instead, a more informed understanding of the medium we’re playing with. Although we all actively use terms such as decibels and Hertz on a daily basis, do we ever stop to think what they really mean? Like many aspects of our natural world, sound has an almost endless fascination to it, which, as sound engineers and musicians, we’re lucky enough to explore on a daily basis.

Our ability to localise a sound source – and hence our perception of stereo – is a result of both the level and timing differences of a signal reaching our ears.